Certified Kubernetes Security Specialist (CKS) Online Practice

Last updated: October 4, 2025

You can use the online practice questions to gauge how well you know The Linux Foundation CKS exam material, and then decide whether to register for the exam.

If you want to pass the exam and cut your preparation time by 35%, choose the CKS dumps (latest real exam questions), which currently contain the latest 29 exam questions and answers.

Answer: A service account provides an identity for processes that run in a Pod.

When you (a human) access the cluster (for example, using kubectl), you are authenticated by the apiserver as a particular User Account (currently this is usually admin, unless your cluster administrator has customized your cluster). Processes in containers inside pods can also contact the apiserver. When they do, they are authenticated as a particular Service Account (for example, default).

When you create a pod, if you do not specify a service account, it is automatically assigned the default service account in the same namespace. If you get the raw JSON or YAML for a pod you have created (for example, kubectl get pods/<podname> -o yaml), you can see that the spec.serviceAccountName field has been set automatically.

You can access the API from inside a pod using automatically mounted service account credentials, as described in Accessing the Cluster. The API permissions of the service account depend on the authorization plugin and policy in use.

In version 1.6+, you can opt out of automounting API credentials for a service account by setting automountServiceAccountToken: false on the service account:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: build-robot
    automountServiceAccountToken: false

In version 1.6+, you can also opt out of automounting API credentials for a particular pod:

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-pod
    spec:
      serviceAccountName: build-robot
      automountServiceAccountToken: false

The pod spec takes precedence over the service account if both specify an automountServiceAccountToken value.
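The precedence rule can be illustrated with a minimal Python sketch. The function name `effective_automount` is hypothetical, and `None` is used here to mean "field not set"; the fallback to mounting the token when neither object sets the field reflects the default behaviour described above.

```python
from typing import Optional


def effective_automount(pod_value: Optional[bool], sa_value: Optional[bool]) -> bool:
    """Resolve whether the service account token gets mounted.

    None means the field is not set on that object. The Pod spec wins
    when it sets a value, then the ServiceAccount; if neither sets a
    value, the token is mounted.
    """
    if pod_value is not None:
        return pod_value
    if sa_value is not None:
        return sa_value
    return True
```

For example, a pod that sets the field to false stays opted out even when its service account sets it to true.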

Answer: Send us your suggestion on it.

Answer:

    [desk@cli] $ ssh worker1
    [worker1@cli] $ apparmor_parser -q /etc/apparmor.d/nginx
    [worker1@cli] $ aa-status | grep nginx
    nginx-profile-1
    [worker1@cli] $ logout
    [desk@cli] $ vim nginx-deploy.yaml

Add these lines under metadata (for a Deployment, this is the Pod template's metadata, spec.template.metadata, because AppArmor annotations apply to Pods):

    annotations: # Add this line
      container.apparmor.security.beta.kubernetes.io/<container-name>: localhost/nginx-profile-1

    [desk@cli] $ kubectl apply -f nginx-deploy.yaml

Explanation:

    [desk@cli] $ ssh worker1
    [worker1@cli] $ apparmor_parser -q /etc/apparmor.d/nginx
    [worker1@cli] $ aa-status | grep nginx
    nginx-profile-1
    [worker1@cli] $ logout
    [desk@cli] $ vim nginx-deploy.yaml
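The manifest edit can also be sketched in Python as a dictionary transformation. This is only an illustration under stated assumptions: the manifest is assumed to be a Deployment already loaded into a dict, the annotation is placed on the Pod template's metadata, and the helper name `with_apparmor_profile` is hypothetical.

```python
import copy

# Annotation key prefix taken from the answer above.
APPARMOR_PREFIX = "container.apparmor.security.beta.kubernetes.io/"


def with_apparmor_profile(deployment: dict, container: str, profile: str) -> dict:
    """Return a copy of the Deployment with the AppArmor annotation set.

    The annotation goes on the Pod template metadata and points the named
    container at a profile already loaded on the node (localhost/<profile>).
    """
    result = copy.deepcopy(deployment)
    template_meta = result["spec"]["template"].setdefault("metadata", {})
    annotations = template_meta.setdefault("annotations", {})
    annotations[APPARMOR_PREFIX + container] = "localhost/" + profile
    return result
```

Working on a copy keeps the original manifest untouched, which makes it easy to compare the two before applying.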


Answer: Fix all of the following violations that were found against the API server:

✑ a. Ensure that the RotateKubeletServerCertificate argument is set to true.

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        component: kubelet
        tier: control-plane
      name: kubelet
      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-controller-manager
        - --feature-gates=RotateKubeletServerCertificate=true # Add this line
        image: gcr.io/google_containers/kubelet-amd64:v1.6.0
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 127.0.0.1
            path: /healthz
            port: 6443
            scheme: HTTPS
          initialDelaySeconds: 15
          timeoutSeconds: 15
        name: kubelet
        resources:
          requests:
            cpu: 250m
        volumeMounts:
        - mountPath: /etc/kubernetes/
          name: k8s
          readOnly: true
        - mountPath: /etc/ssl/certs
          name: certs
        - mountPath: /etc/pki
          name: pki
      hostNetwork: true
      volumes:
      - hostPath:
          path: /etc/kubernetes
        name: k8s
      - hostPath:
          path: /etc/ssl/certs
        name: certs
      - hostPath:
          path: /etc/pki
        name: pki

✑ b. Ensure that the admission control plugin PodSecurityPolicy is set.

    audit: "/bin/ps -ef | grep $apiserverbin | grep -v grep"
    tests:
      test_items:
      - flag: "--enable-admission-plugins"
        compare:
          op: has
          value: "PodSecurityPolicy"
        set: true
    remediation: |
      Follow the documentation and create Pod Security Policy objects as per your environment.
      Then, edit the API server pod specification file $apiserverconf
      on the master node and set the --enable-admission-plugins parameter to a
      value that includes PodSecurityPolicy:
      --enable-admission-plugins=...,PodSecurityPolicy,...
      Then restart the API Server.
    scored: true
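What this check verifies can be approximated in a few lines of Python. This is a rough sketch, not the benchmark tool's own logic: the helper names `flag_value` and `admission_plugin_enabled` are hypothetical, and the comparison splits the flag value on commas and looks for an exact plugin name, which is slightly stricter than a plain substring match.

```python
import shlex


def flag_value(cmdline: str, flag: str):
    """Return the value of `--flag=value` or `--flag value`, or None if absent."""
    args = shlex.split(cmdline)
    for i, arg in enumerate(args):
        if arg == flag:
            # Space-separated form: the value is the next token, if any.
            return args[i + 1] if i + 1 < len(args) else ""
        if arg.startswith(flag + "="):
            return arg[len(flag) + 1:]
    return None


def admission_plugin_enabled(cmdline: str, plugin: str = "PodSecurityPolicy") -> bool:
    """True if the API server command line lists the plugin in --enable-admission-plugins."""
    value = flag_value(cmdline, "--enable-admission-plugins")
    return value is not None and plugin in value.split(",")
```

The `cmdline` argument would be the API server's process command line, such as the one the audit command above prints.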

✑ c. Ensure that the --kubelet-certificate-authority argument is set as appropriate.

    audit: "/bin/ps -ef | grep $apiserverbin | grep -v grep"
    tests:
      test_items:
      - flag: "--kubelet-certificate-authority"
        set: true
    remediation: |
      Follow the Kubernetes documentation and set up the TLS connection between the apiserver and kubelets.
      Then, edit the API server pod specification file $apiserverconf on the master node and set the
      --kubelet-certificate-authority parameter to the path to the cert file for the certificate authority.
      --kubelet-certificate-authority=<ca-string>
    scored: true

Fix all of the following violations that were found against etcd:

✑ a. Ensure that the --auto-tls argument is not set to true.

Edit the etcd pod specification file $etcdconf on the master node and either remove the --auto-tls parameter or set it to false: --auto-tls=false

✑ b. Ensure that the --peer-auto-tls argument is not set to true.

Edit the etcd pod specification file $etcdconf on the master node and either remove the --peer-auto-tls parameter or set it to false: --peer-auto-tls=false
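Both etcd checks follow the same pattern, which the Python sketch below illustrates. It assumes the etcd container's command is available as a list of arguments, and it treats a bare flag without `=value` as true; the function name `auto_tls_violations` is hypothetical.

```python
def auto_tls_violations(args):
    """Return the flags among --auto-tls and --peer-auto-tls that are set to true."""
    violations = []
    for arg in args:
        name, sep, value = arg.partition("=")
        if name in ("--auto-tls", "--peer-auto-tls"):
            # Assumption: a bare boolean flag (no "=value") counts as true.
            if not sep or value.strip().lower() == "true":
                violations.append(name)
    return violations
```

An empty result means both flags are either absent or explicitly set to false, which is the state the remediation asks for.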

Answer:

    $ vim /etc/falco/falco_rules.local.yaml
    $ kill -1 <PID of falco>

Explanation:

    [desk@cli] $ ssh node01
    [node01@cli] $ vim /etc/falco/falco_rules.yaml

Search for "Container Drift Detected" and paste the rule into falco_rules.local.yaml:

    [node01@cli] $ vim /etc/falco/falco_rules.local.yaml

    - rule: Container Drift Detected (open+create)
      desc: New executable created in a container due to open+create
      condition: >
        evt.type in (open,openat,creat) and
        evt.is_open_exec=true and
        container and
        not runc_writing_exec_fifo and
        not runc_writing_var_lib_docker and
        not user_known_container_drift_activities and
        evt.rawres>=0
      output: > # Add this; refer to the Falco documentation
        %evt.time,%user.uid,%proc.name
      priority: ERROR

[node01@cli] $ vim /etc/falco/falco.yaml

Answer: Send us your feedback on this.

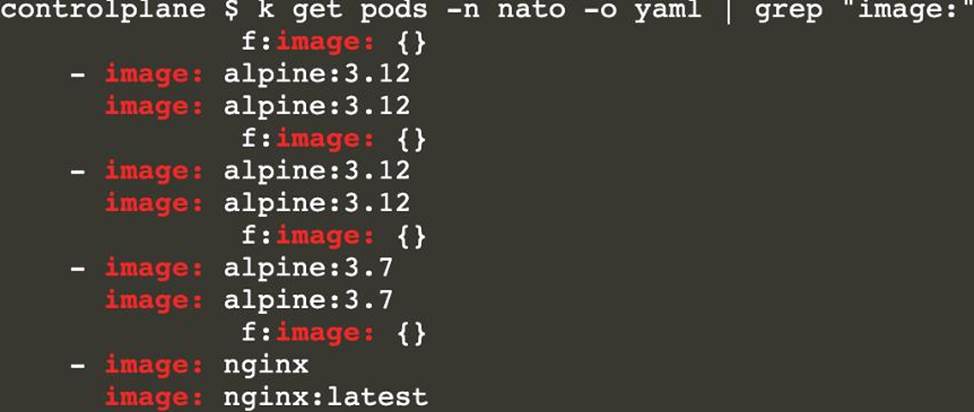

Answer:

    [controlplane@cli] $ k get pods -n nato -o yaml | grep "image: "
    [controlplane@cli] $ trivy image <image-name>
    [controlplane@cli] $ k delete pod <vulnerable-pod> -n nato

    [desk@cli] $ ssh controlnode
    [controlplane@cli] $ k get pods -n nato
    NAME       READY   STATUS    RESTARTS   AGE
    alohmora   1/1     Running   0          3m7s
    c3d3       1/1     Running   0          2m54s
    neon-pod   1/1     Running   0          2m11s
    thor       1/1     Running   0          58s
    [controlplane@cli] $ k get pods -n nato -o yaml | grep "image: "


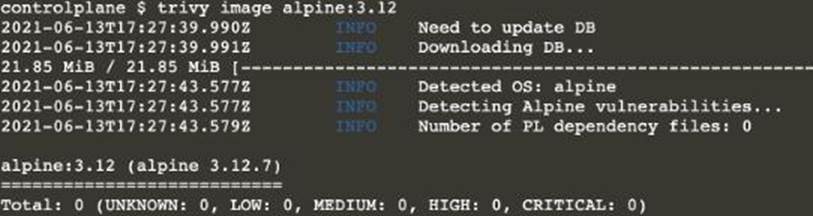

[controlplane@cli] $ trivy image <image-name>

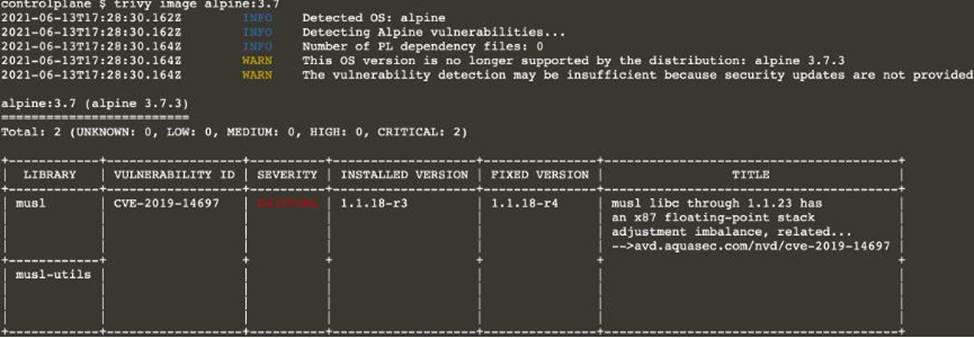


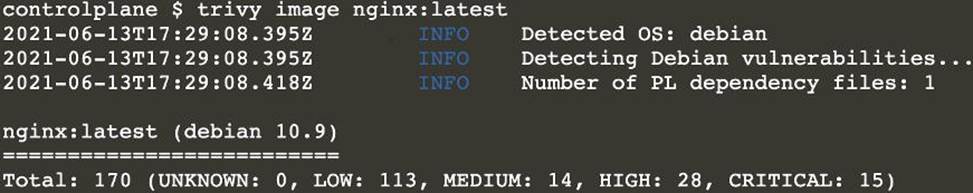

Note: Two images have vulnerabilities with severity High and Critical. Delete the pods running nginx:latest and alpine:3.7.

[controlplane@cli] $ k delete pod thor -n nato
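The "scan, then decide" step can be sketched in Python. The sketch assumes the scan was run with JSON output (for example `trivy image --format json <image-name>`) and that the report carries a top-level `Results` list whose entries hold a `Vulnerabilities` list with a `Severity` field; the function name `has_high_or_critical` is hypothetical.

```python
import json


def has_high_or_critical(trivy_json: str) -> bool:
    """True if a Trivy JSON report contains any HIGH or CRITICAL vulnerability."""
    report = json.loads(trivy_json)
    # "Results" or "Vulnerabilities" may be missing or null for clean targets.
    for result in report.get("Results") or []:
        for vuln in result.get("Vulnerabilities") or []:
            if vuln.get("Severity") in ("HIGH", "CRITICAL"):
                return True
    return False
```

A pod whose image makes this function return true would be a candidate for deletion in this task.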

Answer:

    k get pods -n prod
    k get pod <pod-name> -n prod -o yaml | grep -E 'privileged|readOnlyRootFilesystem'

Delete the pods that have either of these two properties: privileged: true or readOnlyRootFilesystem: false.

    [desk@cli]$ k get pods -n prod
    NAME    READY   STATUS    RESTARTS   AGE
    cms     1/1     Running   0          68m
    db      1/1     Running   0          4m
    nginx   1/1     Running   0          23m
    [desk@cli]$ k get pod nginx -n prod -o yaml | grep -E 'privileged|RootFilesystem'
    {"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"creationTimestamp":null,"labels":{"run":"nginx"},"name":"nginx","namespace":"prod"},"spec":{"containers":[{"image":"nginx","name":"nginx","resources":{},"securityContext":{"privileged":true}}],"dnsPolicy":"ClusterFirst","restartPolicy":"Always"},"status":{}}
    f:privileged: {}
    privileged: true


[desk@cli]$ k delete pod nginx -n prod

[desk@cli]$ k get pod db -n prod -o yaml | grep -E 'privileged|RootFilesystem'


[desk@cli]$ k get pod cms -n prod -o yaml | grep -E 'privileged|RootFilesystem'
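The per-pod check can also be expressed as a small Python sketch over a pod manifest loaded as a dict (for example from `-o json` output). It is an illustration only: the function name `violates_policy` is hypothetical, and treating an unset `readOnlyRootFilesystem` the same as `false` is an assumption made here because the field defaults to false when omitted.

```python
def violates_policy(pod: dict) -> bool:
    """True if any container is privileged or lacks a read-only root filesystem."""
    for container in pod.get("spec", {}).get("containers", []):
        ctx = container.get("securityContext") or {}
        if ctx.get("privileged") is True:
            return True
        # Assumption: an unset readOnlyRootFilesystem is treated like false.
        if ctx.get("readOnlyRootFilesystem") is not True:
            return True
    return False
```

Unlike the grep approach, this inspects only the container security contexts, so it is not affected by matches in annotations or managed fields.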


Answer: Send us your feedback on it.

Answer: To add a Kubernetes cluster to your project, group, or instance:

✑ Navigate to your:

✑ Click Add Kubernetes cluster.

✑ Click the Add existing cluster tab and fill in the details:

Get the API URL by running this command:

kubectl cluster-info | grep -E 'Kubernetes master|Kubernetes control plane' | awk '/http/ {print $NF}'


kubectl get secret <secret name> -o jsonpath="{['data']['ca\.crt']}"
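Values under a Secret's `data` field are base64-encoded, so the string printed by the command above would normally need decoding before it can be pasted as a PEM certificate. A minimal Python sketch, with the hypothetical helper name `decode_ca_cert`:

```python
import base64


def decode_ca_cert(encoded: str) -> str:
    """Decode the base64 string stored under data['ca.crt'] into PEM text."""
    return base64.b64decode(encoded).decode("utf-8")
```

The decoded text should begin with a `-----BEGIN CERTIFICATE-----` line.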